🏆 ClueWeb-Reco Leaderboard

Candidate Ranking Results

| Model | Recall@10 ▼ | NDCG@10 | Recall@50 | NDCG@50 | Recall@100 | NDCG@100 |

|---|---|---|---|---|---|---|

| DeepSeek-V3-QueryGen | 0.0127 | 0.0082 | 0.0264 | 0.0111 | 0.0371 | 0.0129 |

| GPT-4.1-QueryGen | 0.0107 | 0.0050 | 0.0195 | 0.0068 | 0.0254 | 0.0077 |

| HLLM | 0.0088 | 0.0041 | 0.0137 | 0.0052 | 0.0176 | 0.0059 |

| GPT-3.5-Turbo-QueryGen | 0.0088 | 0.0027 | 0.0176 | 0.0050 | 0.0312 | 0.0072 |

| Qwen3-235B-QueryGen | 0.0088 | 0.0046 | 0.0234 | 0.0077 | 0.0303 | 0.0088 |

| GPT-4o-QueryGen | 0.0068 | 0.0042 | 0.0146 | 0.0058 | 0.0264 | 0.0077 |

| Gemini-2.5-Flash-QueryGen | 0.0068 | 0.0042 | 0.0146 | 0.0058 | 0.0264 | 0.0077 |

| Claude-Sonnet-4-QueryGen | 0.0068 | 0.0032 | 0.0166 | 0.0052 | 0.0215 | 0.0060 |

| Kimi-K2-QueryGen | 0.0039 | 0.0022 | 0.0156 | 0.0050 | 0.0234 | 0.0062 |

| TASTE | 0.0020 | 0.0015 | 0.0039 | 0.0019 | 0.0039 | 0.0019 |

Prompt Construction for Query Generation

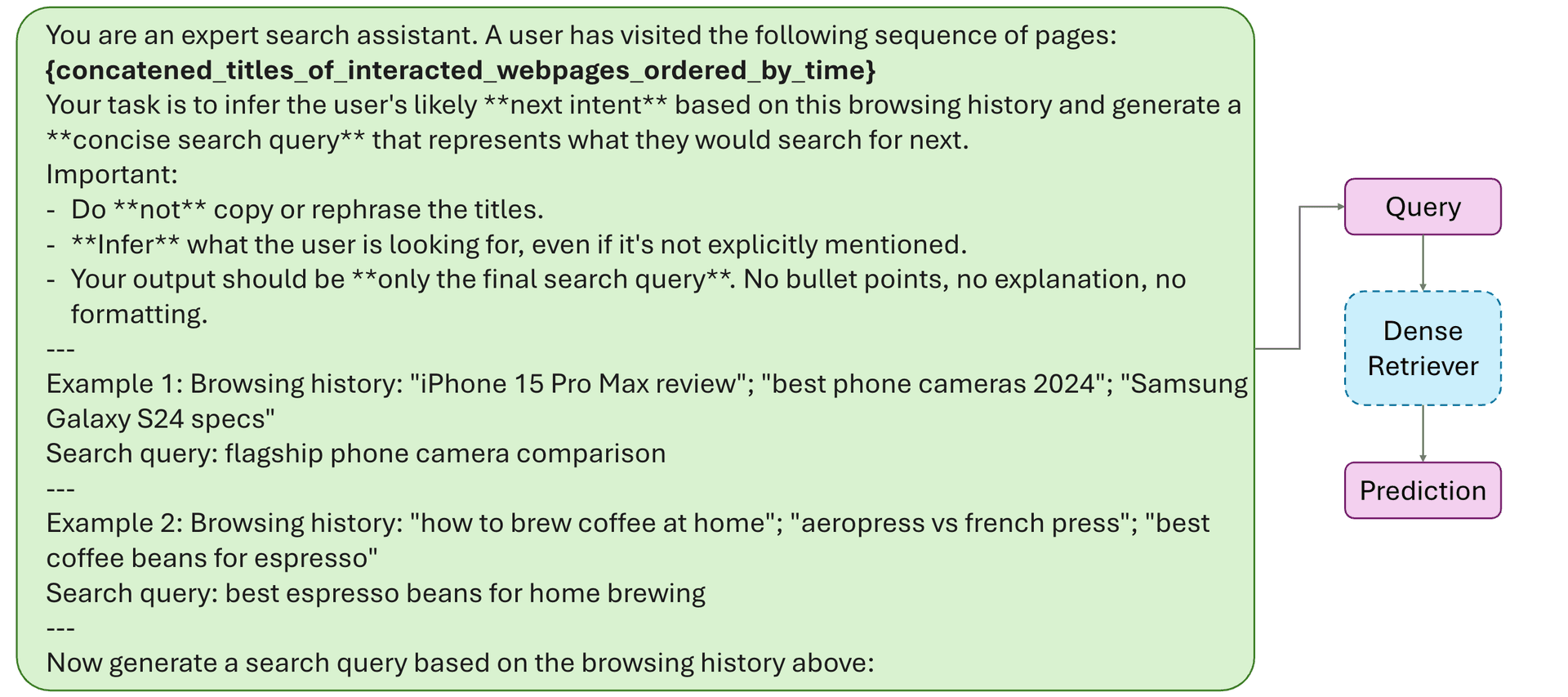

To assess the generalization power of LLM-based recommenders, ClueWeb-Reco includes a query generation task. Browsing history titles are formatted into a prompt, and LLMs are asked to infer the next likely interest without rephrasing. The generated query is then embedded and matched to the candidate pool via dense retrieval.

Note: Prompt design plays a critical role in the performance of LLM-QueryGen models. ORBIT encourages community contributions of custom prompts to better reflect the strengths and nuances of each language model.